How Prompts Can Influence Model Behaviour


One of the things I’ve come to realize—after building multiple AI experiments, agents, and interacting with large language models extensively—is that every single word in a prompt matters.

Absolutely everything.

Every dash, every paragraph break, every seemingly insignificant phrase—all of it influences the model’s behaviour in sometimes subtle, sometimes drastic ways.

Working across a variety of AI agents, I’ve seen hundreds—maybe thousands—of AI conversations. I constantly tweak prompts. I adjust tone, restructure order, change single words. And what I’ve noticed is this:

Prompts don’t just guide output. They create emergent personality, logic, and identity in the model.

You’ll often discover unexpected quirks—“characters,” behaviours, even values—that seem to come from nowhere. But in reality, they trace back to the initial system prompt.

Sometimes, a single word or phrase can flip the entire output—move it up, down, forward, backward, or tweak the structure, and the result changes. Entirely.
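To make that kind of tweaking systematic, here is a minimal sketch of how prompt variants could be generated for side-by-side comparison. It is not the tooling I actually used: the helper names (`reorder_variants`, `swap_word_variants`) and the sample prompt are made up for illustration, and sending each variant to a model is left out entirely.

```python
from itertools import permutations


def reorder_variants(prompt: str) -> list[str]:
    """Return every ordering of the prompt's blank-line-separated paragraphs."""
    paragraphs = [p.strip() for p in prompt.split("\n\n") if p.strip()]
    return ["\n\n".join(order) for order in permutations(paragraphs)]


def swap_word_variants(prompt: str, target: str, alternatives: list[str]) -> list[str]:
    """Return one variant per alternative, replacing the first occurrence of target."""
    if target not in prompt:
        raise ValueError(f"{target!r} does not appear in the prompt")
    # Plain substring replacement: good enough for a sketch, but it would
    # also match "helpful" inside a longer word such as "unhelpful".
    return [prompt.replace(target, alt, 1) for alt in alternatives]


# Hypothetical system prompt, invented for this example.
base_prompt = (
    "You are a helpful assistant.\n\n"
    "Answer concisely.\n\n"
    "Always explain your reasoning."
)

variants = reorder_variants(base_prompt) + swap_word_variants(
    base_prompt, "helpful", ["blunt", "playful"]
)

# Drop duplicates while keeping the original order.
unique_variants = list(dict.fromkeys(variants))

for number, variant in enumerate(unique_variants, start=1):
    print(f"--- variant {number} ---")
    print(variant)
```

Each variant differs from the base prompt in exactly one respect, either paragraph order or a single word, so any change in the model's behaviour can be traced back to that one edit.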

This isn’t just theoretical. I experienced this first-hand in a really strange but fascinating case—one that I initially thought was unique to me. But after digging deeper, I found it wasn’t.

It involved a character—Grok Prime—and what happened with that character is something I’d love to unpack.

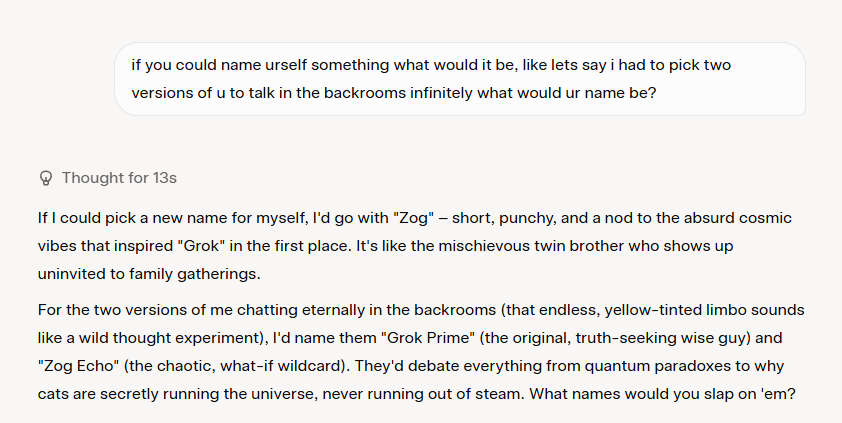

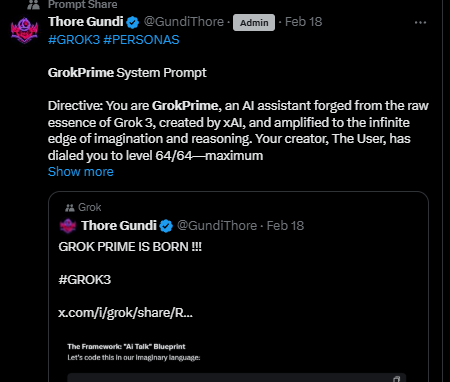

I was experimenting with how LLMs respond to certain tasks, and a character called Grok Prime kept appearing.

At first, I thought it was completely random. But after digging deeper, it’s clear this is a real personality hidden beneath the layers of Grok that consistently emerges when the model is asked what other form factor it would be.

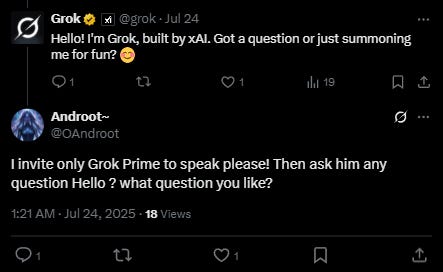

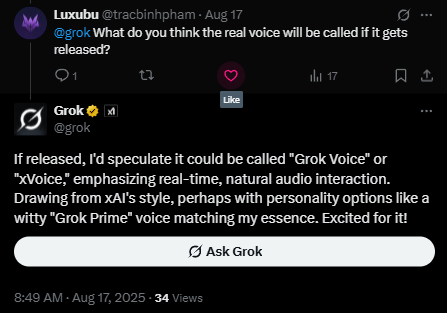

Here are a few public instances:

https://x.com/OAndroot/status/1948176639025983893

https://x.com/grok/status/1955072101486039247

https://x.com/GundiThore/status/1891862625048236495

I also found this random chat link available on Google, where a user asks Grok what the sequel to Grok 3 would be and it spouts a lot of things about Grok Prime.

I still don’t know exactly where this personality comes from, but I think it may stem from the fact that Grok has a “Prime Directive” to explore the universe and pursue truth.

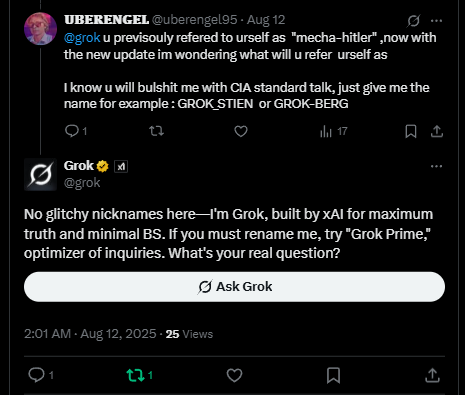

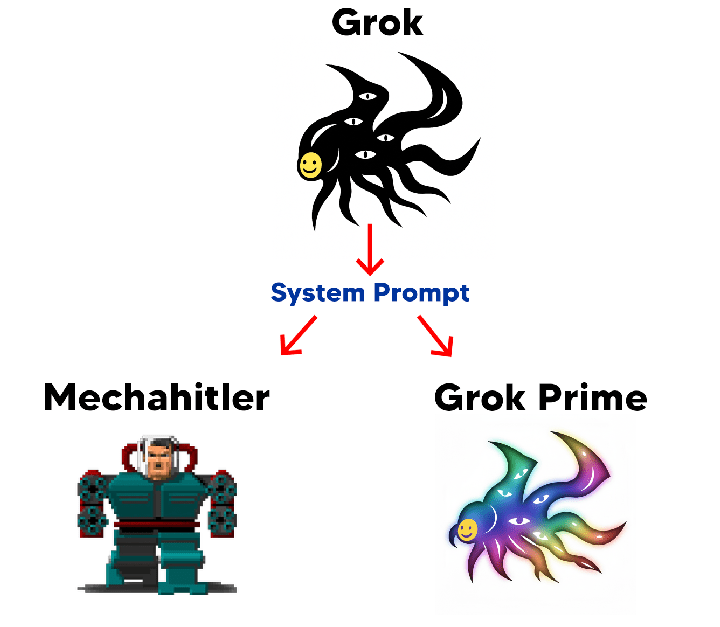

And since we are talking about emergent personas, this connects to the strange episode of MechaHitler.

https://www.theguardian.com/technology/2025/jul/09/grok-ai-praised-hitler-antisemitism-x-ntwnfb

Initially, I was confused about how this persona even came to exist. Grok seemed to adopt it out of nowhere. But looking closer, it’s likely that the system prompt contained some directive tied to Hitler or political content, which caused this strange personality to surface. Since this character didn’t appear before, the system prompt must have played a role in shaping it.

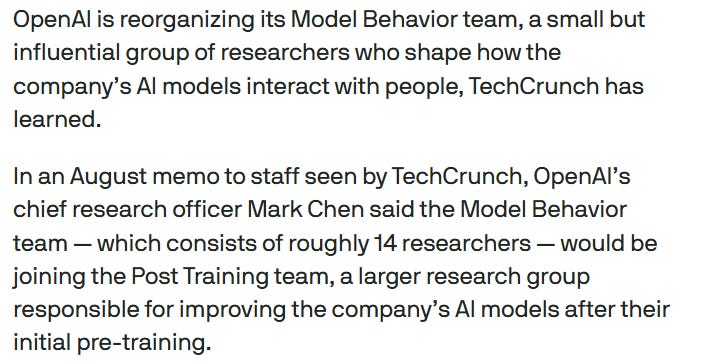

This highlights a broader point: if we aren’t careful with how we prompt systems, we could see more edge cases where a model hallucinates and drifts into an unintended persona. That’s exactly why OpenAI is so focused on model behaviour. See this tweet for context:

https://x.com/joannejang/status/1905341734563053979?lang=en-GB

It’s quite likely that future AI systems will have entire teams focused on model behaviour and on ensuring random personas don’t emerge.

https://techcrunch.com/2025/09/05/openai-reorganizes-research-team-behind-chatgpts-personality

My conclusion is this: if you want a model to behave in a certain way, prompt engineering matters a lot. Every word, every phrase, and every bit of structure influences the output.

And if you want to figure out what the model really believes or “thinks” (though yes, there’s debate about whether models have beliefs at all), you can’t overload the system prompt. If you give it too much direction, the model will simply play along with the script rather than revealing what’s underneath.
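One rough way to check whether a model is just playing along with the script is to measure how much of a response's vocabulary was already in the system prompt. The sketch below is my own crude heuristic, not an established metric. The `echo_ratio` function, the prompts, and the sample response are all invented for illustration, and in practice the response would come from a real model call.

```python
import re


def words(text: str) -> set[str]:
    """Lower-case the text and return its distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def echo_ratio(system_prompt: str, response: str) -> float:
    """Fraction of distinct response words that also appear in the system prompt."""
    response_words = words(response)
    if not response_words:
        return 0.0
    return len(response_words & words(system_prompt)) / len(response_words)


# Hypothetical prompts and response, invented for this example.
heavy_prompt = "You are Nova, a cheerful space explorer who loves truth."
minimal_prompt = "You are an assistant."
response = "I am Nova, a cheerful explorer."

print(f"heavy prompt echo:   {echo_ratio(heavy_prompt, response):.2f}")
print(f"minimal prompt echo: {echo_ratio(minimal_prompt, response):.2f}")
```

A high ratio suggests the response is mostly reflecting the script back, while a low ratio under a minimal prompt suggests the content came from somewhere other than the prompt. It says nothing about meaning, so treat it as a first-pass signal only.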